Part 1 – Parallel Processing

Previous research conducted by Capacitas has shown that the conversion rate of a web site starts to degrade when page load times exceed 2 seconds: users tend to leave a web site if they have to wait longer than 2 seconds for pages to load. Why would a web page take longer than 2 seconds to load? The answer is often found in its code.

Bad code often leads to bad performance!

Such a high demand for instant results means that the code behind web pages needs to be optimised to run as quickly as possible. While there is more to code optimisation than merely speeding up the execution of a web page and its functions, from a business point of view, faster execution removes one of the key reasons customers leave a web site, and may well lure customers away from competitor sites.

(Source: https://community.semrush.com/uploads/media/25/33/2533f309261402561981514398e61325.jpg)

A whole host of coding habits can degrade web page performance. Luckily, each of these habits can be addressed with a faster alternative that doesn't change the core functionality of the code.

Throughout this series of blogs, I’ll be looking at the following habits:

- Lack of Parallelism & Multi-Threading

- Over-reliance on server-side code

- Poorly structured and expensive database queries

- Use of variables

In this part of the blog, I’ll be looking at the lack of parallelism and multi-threading. Throughout the series, I’ll be referring to and commenting on C# examples.

Lack of Parallelism & Multi-Threading

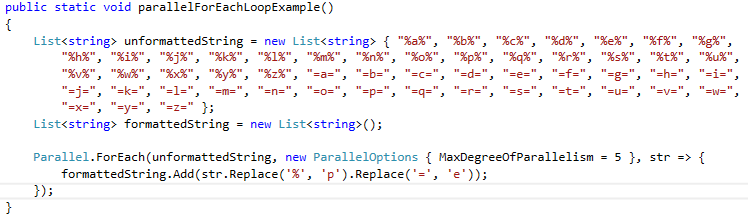

Where possible, using parallelism instead of simple For or ForEach loops can substantially improve the speed of a page. The idea behind parallelism is to run a number of tasks simultaneously, rather than one by one. In C#, this is done with Parallel.ForEach, which spreads the iterations of a loop across multiple threads; the MaxDegreeOfParallelism option sets a user-defined upper limit on how many of those iterations run at the same time.
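As a rough illustration of the MaxDegreeOfParallelism setting, here is a minimal sketch, assuming a .NET 6 (or later) console app using top-level statements. The twenty items, the limit of 4 and the Thread.Sleep call standing in for real work are all made up for the example; the code simply records how many iterations were running at once, which should never exceed the limit.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Twenty illustrative work items.
var items = Enumerable.Range(1, 20).ToList();

// Allow at most 4 iterations to run at the same time (an example value).
var options = new ParallelOptions { MaxDegreeOfParallelism = 4 };

var results = new ConcurrentBag<int>(); // safe for concurrent adds
object gate = new object();
int running = 0;   // iterations currently in progress
int peak = 0;      // highest number seen running at once

Parallel.ForEach(items, options, item =>
{
    lock (gate)
    {
        running++;
        if (running > peak) peak = running;
    }

    Thread.Sleep(20);          // stand-in for real per-item work
    results.Add(item * item);

    lock (gate) { running--; }
});

Console.WriteLine($"Processed {results.Count} items; peak concurrency was {peak}");
```

How many iterations actually overlap depends on the machine and the thread pool, but the limit is never exceeded.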

Parallel.ForEach loops are particularly good for scenarios where we need to loop through hundreds or thousands of items and execute some function on each item.
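A minimal sketch of that kind of scenario, again assuming a .NET 6+ console app: the IsPrime check below is a hypothetical stand-in for "some function", applied to a couple of thousand items. Because the iterations finish in no particular order, the results are collected in a ConcurrentBag, which is designed for concurrent adds, rather than a plain List&lt;T&gt;, which isn't thread-safe.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical per-item function: a deliberately CPU-bound primality check.
static bool IsPrime(int n)
{
    if (n < 2) return false;
    for (int d = 2; d * d <= n; d++)
        if (n % d == 0) return false;
    return true;
}

// A couple of thousand illustrative items.
int[] numbers = Enumerable.Range(0, 2000).ToArray();

// ConcurrentBag allows many threads to add at once; arrival order is not
// guaranteed, so we sort afterwards.
var primes = new ConcurrentBag<int>();

Parallel.ForEach(numbers, n =>
{
    if (IsPrime(n)) primes.Add(n);
});

int[] sorted = primes.OrderBy(p => p).ToArray();
Console.WriteLine($"{sorted.Length} primes below {numbers.Length}; largest is {sorted[^1]}");
```

If the order of the output matters, either sort afterwards, as here, or write each result into a pre-sized array by index.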

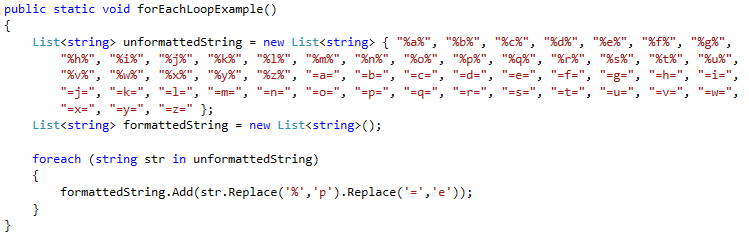

Using a normal For loop:

Using a Parallel.ForEach loop:

In terms of syntax, the two methods look very similar. However, because the Parallel.ForEach loop processes several items at the same time, it can get through our list of unformattedString variables considerably quicker than the For loop.
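The following is a hypothetical sketch, not the code from the original example, assuming a .NET 6+ console app. It runs a made-up FormatString function over a list called unformattedStrings, first with a For loop and then with Parallel.ForEach, timing both and checking that they produce the same output. The Thread.Sleep inside FormatString simulates per-item cost; with trivially cheap work, the parallel version may not come out ahead at all.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical formatter standing in for real per-item work.
static string FormatString(string raw)
{
    Thread.Sleep(2); // simulated cost, e.g. a lookup or I/O call
    return raw.Trim().ToUpperInvariant();
}

// Hypothetical input list of unformatted strings.
List<string> unformattedStrings =
    Enumerable.Range(1, 100).Select(i => $"  item {i}  ").ToList();

// 1) Normal For loop: one item at a time, in order.
var sw = Stopwatch.StartNew();
var sequentialResults = new string[unformattedStrings.Count];
for (int i = 0; i < unformattedStrings.Count; i++)
{
    sequentialResults[i] = FormatString(unformattedStrings[i]);
}
sw.Stop();
Console.WriteLine($"For loop:         {sw.ElapsedMilliseconds} ms");

// 2) Parallel.ForEach: several items at once. Each iteration writes to its
//    own slot (by index), so no locking is needed and order is preserved.
sw.Restart();
var parallelResults = new string[unformattedStrings.Count];
Parallel.ForEach(unformattedStrings, (s, state, index) =>
{
    parallelResults[index] = FormatString(s);
});
sw.Stop();
Console.WriteLine($"Parallel.ForEach: {sw.ElapsedMilliseconds} ms");

Console.WriteLine(sequentialResults.SequenceEqual(parallelResults) ? "Same output" : "Mismatch");
```

The (item, state, index) overload of Parallel.ForEach hands each iteration its position in the list, which is what lets the results go straight into a pre-sized array. Actual timings will vary from machine to machine.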

However, Parallel.ForEach loops aren’t always the best solution and must be approached with caution. For example, if we need to loop through and aggregate or sum values, a Parallel.ForEach loop in which several threads update the same running total without synchronisation will mess up the sums, because concurrent updates can overwrite one another. Nor are Parallel.ForEach loops beneficial in scenarios where we only need to loop through a small number of items, as the overhead of coordinating the threads can outweigh any gain. They can also hinder, rather than help, performance if too many threads are fired off to execute processor-heavy operations.
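To illustrate the aggregation pitfall, here is a sketch (again assuming a .NET 6+ console app, with made-up data) that sums a million numbers twice. The first loop has every thread update one shared total, so updates can be lost. The second uses the Parallel.ForEach overload with localInit and localFinally delegates: each thread keeps its own subtotal, and the subtotals are combined with Interlocked.Add, which is one common way to aggregate safely in parallel.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

long[] values = Enumerable.Range(1, 1_000_000).Select(i => (long)i).ToArray();

// UNSAFE: every thread reads and writes the same variable, so updates may be
// lost and the total can come out too low.
long unsafeTotal = 0;
Parallel.ForEach(values, v => { unsafeTotal += v; });

// SAFE: each thread accumulates its own subtotal, and the subtotals are
// added to the shared total atomically, once per thread.
long safeTotal = 0;
Parallel.ForEach(
    values,
    () => 0L,                                              // localInit: per-thread subtotal
    (v, state, subtotal) => subtotal + v,                  // body: no shared state touched
    subtotal => Interlocked.Add(ref safeTotal, subtotal)); // localFinally: combine

long expected = values.Sum();
Console.WriteLine($"Expected {expected}, unsafe {unsafeTotal}, safe {safeTotal}");
```

Because the shared total is only touched once per thread in the safe version, there is very little contention; the unsafe total will differ from run to run.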

Stay tuned for the second part of this blog, where I’ll be discussing how over-reliance on server-side code can affect performance.
